General concepts

MLReef is an MLOps platform focused on collaboration. It aims to integrate all required value-adding steps in one platform.

Machine learning combines two major elements, data and code, with the goal of enabling automated information processing. This general concept guides how we design features, with an underlying focus on collaboration within teams and across the entire MLReef community.

The following table points to the documentation for each concept:

| Concepts | Documentation for |
|---|---|
| Data vs code repositories | Types of Git repositories. |
| Data pipeline types | Data pipelines and experiments. |
| Publishing code repositories | Accessing code repositories in pipelines. |
| Marketplace | All about discoverability of community content. |

Data vs code repositories

MLReef has two different types of Git repositories:

- Data repositories: For hosting ML projects.

- Code repositories: For hosting data operators.

Data repositories host ML projects

We call them data repositories because:

- they host the data and the data pipelines used to create ML models, and

- they are based on Git LFS by default.

You can create new projects or access existing ones through ML projects.

Data repositories are meant to cover the actual value-adding steps of creating and deploying an ML model.

The next section describes the different data pipelines available in data repositories.

Data pipeline types

In MLReef you can find three different types of data pipelines:

- Data visualization pipelines

- Data processing pipelines (called DataOps)

- Experiment pipelines

All of these are found within ML projects (data repositories), where they operate on your data.

You can view more detailed information about each pipeline in the corresponding section:

| Pipeline types | Documentation for |
|---|---|
| Data visualization | Pipeline to create data visualizations. |
| DataOps | Pipeline for automated processing of your data. |
| Experiments | Pipeline to create ML models. |

Publishing code repositories

As described in the section Data vs code repositories, there are two types of repositories: one for ML projects (data) and one for code (i.e. Python scripts).

Within an ML project you can execute data pipelines. The elements of each pipeline are:

- data (stored in data repositories)

- code (stored in code repositories)

To make a code repository (i.e. a script) available in a data pipeline, you must first publish it. Publishing makes a code repository available within the corresponding pipeline, either publicly or privately depending on the repository's visibility level:

- Scripts from DataOps repositories will be available in the DataOps pipeline.

- Scripts from Data Visualization repositories will be available in the Data Visualization pipeline.

- Scripts from Model repositories will be available in the Experiment pipeline.
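The mapping in the list above can be sketched as a simple lookup. This is an illustrative model only, not MLReef's API: the names `CodeRepoType`, `PipelineType`, `PIPELINE_FOR_REPO`, and `pipeline_for` are hypothetical and were chosen for this example.

```python
from enum import Enum
from typing import Optional


class CodeRepoType(Enum):
    """Code repository types named in the text (the enum is hypothetical)."""
    DATA_OPS = "DataOps"
    DATA_VISUALIZATION = "Data Visualization"
    MODEL = "Model"


class PipelineType(Enum):
    """Pipeline types named in the text (the enum is hypothetical)."""
    DATA_OPS = "DataOps pipeline"
    DATA_VISUALIZATION = "Data Visualization pipeline"
    EXPERIMENT = "Experiment pipeline"


# Which pipeline receives the scripts of each code repository type,
# following the three bullet points above.
PIPELINE_FOR_REPO = {
    CodeRepoType.DATA_OPS: PipelineType.DATA_OPS,
    CodeRepoType.DATA_VISUALIZATION: PipelineType.DATA_VISUALIZATION,
    CodeRepoType.MODEL: PipelineType.EXPERIMENT,
}


def pipeline_for(repo_type: CodeRepoType, published: bool) -> Optional[PipelineType]:
    """Return the pipeline in which the repository's scripts appear.

    An unpublished code repository is not available in any pipeline,
    so the function returns None in that case.
    """
    if not published:
        return None
    return PIPELINE_FOR_REPO[repo_type]
```

For example, `pipeline_for(CodeRepoType.MODEL, published=True)` yields the Experiment pipeline, while the same repository yields `None` until it has been published.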

Separating data repositories from code repositories keeps concerns apart and provides full reproducibility and easy accessibility.

See the detailed chapter on publishing for more information.

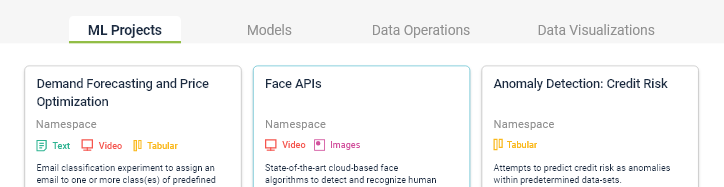

Marketplace

Independently of the publishing process described above, all repositories (data and code) are accessible and discoverable in the marketplace.

The main distinctions are:

Repository type

A repository is found under one of the following categories:

- ML Projects = data repositories

- Models = code repositories

- Data Ops = code repositories

- Data Visualizations = code repositories

Visibility level

When creating a new project (e.g. an ML project or a Model), you can set its visibility to either private or public.

- Private: These repositories are accessible only to you and your project members.

- Public: These repositories are accessible to everyone.
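The two visibility levels amount to a small access rule, sketched below. This is a minimal model under stated assumptions, not MLReef's implementation: the `Repository` class, its fields, and `can_access` are hypothetical, and "you" is modelled as the repository owner.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Repository:
    """Hypothetical stand-in for a data or code repository."""
    name: str
    owner: str
    public: bool = False  # assumed default for this sketch: private
    members: Set[str] = field(default_factory=set)


def can_access(repo: Repository, user: str) -> bool:
    """Apply the visibility rule described above."""
    if repo.public:
        # Public repositories are accessible to everyone.
        return True
    # Private repositories are limited to the owner and project members.
    return user == repo.owner or user in repo.members
```

With a private repository owned by `alice` and with `bob` as a member, `can_access` returns `True` for both of them and `False` for any other user.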

Ownership

Projects are either external (not owned by you) or owned by you or your group. Owned projects appear under the My xxx tab, whereas projects you do not own appear in the Explore tab.

You can take ownership of an external project by forking the project.
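The ownership rule and the effect of forking can be sketched as follows. This is an illustrative model only: `Project`, `is_owned`, `tab_for`, and `fork` are hypothetical names, and the sketch assumes that a fork is a copy of the project with a new owner, leaving the original untouched.

```python
from dataclasses import dataclass, replace
from typing import FrozenSet


@dataclass(frozen=True)
class Project:
    """Hypothetical stand-in for a project in the marketplace."""
    name: str
    owner: str  # a user name or a group name


def is_owned(project: Project, user: str, groups: FrozenSet[str] = frozenset()) -> bool:
    """A project is owned if it belongs to the user or to one of their groups."""
    return project.owner == user or project.owner in groups


def tab_for(project: Project, user: str, groups: FrozenSet[str] = frozenset()) -> str:
    """Return the tab under which the project is listed for this user.

    "My" stands for the "My xxx" tab mentioned in the text.
    """
    return "My" if is_owned(project, user, groups) else "Explore"


def fork(project: Project, new_owner: str) -> Project:
    """Return a copy of the project owned by new_owner (assumed fork semantics)."""
    return replace(project, owner=new_owner)
```

In this model, a project owned by `alice` is listed under Explore for `bob`; after `bob` forks it, his copy is listed under his own tab while the original still belongs to `alice`.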